What does it take to prove a ZK-L2?

Can proving be outsourced without L2s accepting risks and trade-offs?


TL;DR

In this article, we look at the characteristics prover networks and marketplaces need in order to provide the finality guarantees ZK-L2s require. Zac, the co-founder of Aztec, recently posted about third-party prover marketplaces as middlemen that may introduce risks and unhealthy dependencies to the underlying network.

His point concerned the L1, but the same applies to any L1 or L2 that uses validity proofs for settlement.

We explore a prover network protocol design that aligns well with decentralized protocols and prevents dependencies or central points of failure.

Why do ZK rollups need proofs?

ZK and validity rollups need proofs for chain finality. The process, in a nutshell, is the following:

The sequencer commits the block to the rollup contract on L1.

The proof provider generates the corresponding proof that allows verification of the state transitions.

The proof is submitted to L1 where the verifier contract verifies the proof.

If the proof is valid, i.e. the state transitions in the initial commitment match those included in the proof, the L2 block is settled and reaches finality. If an invalid proof or no proof is delivered, the pending block(s) with their chain activity will be pruned, and transactions will be reorged.
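To make the flow concrete, here is a minimal Python sketch of the settlement logic described above. It is a toy model under stated assumptions, not any rollup's actual contract: `PendingBlock`, `Proof`, and `settle` are hypothetical names, and comparing state roots stands in for real cryptographic proof verification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class BlockStatus(Enum):
    PENDING = "pending"      # committed on L1, waiting for a proof
    FINALIZED = "finalized"  # a valid proof was verified on L1
    PRUNED = "pruned"        # no valid proof arrived, block is reorged out


@dataclass
class Proof:
    # Hypothetical stand-in: a real validity proof is a succinct
    # cryptographic object, not a plain state root.
    proven_state_root: str


@dataclass
class PendingBlock:
    committed_state_root: str
    status: BlockStatus = BlockStatus.PENDING


def settle(block: PendingBlock, proof: Optional[Proof]) -> BlockStatus:
    """Toy verifier: finalize if the proof matches the commitment, else prune."""
    if proof is not None and proof.proven_state_root == block.committed_state_root:
        block.status = BlockStatus.FINALIZED
    else:
        # Covers both an invalid proof and a missing proof.
        block.status = BlockStatus.PRUNED
    return block.status
```

Calling `settle(PendingBlock("0xabc"), None)` prunes the block, which is the non-delivery case the rest of this article is concerned with.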

Are auctions part of the general block production in blockchains?

If we look at blockchains and the block production process of PoS networks in general, we see that traditionally they do not utilize auctions for validator/sequencer selection and block building.

The reason is that auctioning out block production would leave too large an attack surface:

If the protocol runs an auction where cost is a key selection factor, i.e. it favors entities that build a block for the lowest fee and leave the most revenue with the protocol, the richest entities can underbid everyone else and easily become dominant. That undermines validator decentralization, a core principle that is key for high liveness and censorship resistance.

Even though sophisticated auction mechanisms exist, none can replace random selection as the most neutral and reliable model for achieving these goals.
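The difference between the two selection models can be illustrated with a small simulation. This is a sketch under simplifying assumptions: the operator names and fee figures in `MIN_FEE` are made up, each operator always bids its minimum sustainable fee, and random selection is uniform (real PoS networks typically weight selection by stake).

```python
import random
from collections import Counter
from typing import Dict, Tuple

# Hypothetical minimum fee each operator can sustain.
# "whale" is well capitalized and can afford to undercut everyone.
MIN_FEE: Dict[str, float] = {"whale": 1.0, "op_a": 3.0, "op_b": 3.2, "op_c": 3.5}


def lowest_bid_winner(min_fee: Dict[str, float]) -> str:
    """Cost-driven auction: whoever can bid lowest wins the slot."""
    return min(min_fee, key=min_fee.get)


def random_winner(min_fee: Dict[str, float], rng: random.Random) -> str:
    """Random selection: every operator has the same chance."""
    return rng.choice(sorted(min_fee))


def win_shares(rounds: int, seed: int = 0) -> Tuple[Counter, Counter]:
    """Count wins per operator under both selection models."""
    rng = random.Random(seed)
    auction: Counter = Counter()
    lottery: Counter = Counter()
    for _ in range(rounds):
        auction[lowest_bid_winner(MIN_FEE)] += 1
        lottery[random_winner(MIN_FEE, rng)] += 1
    return auction, lottery
```

In this toy setup the auction hands every slot to the cheapest bidder, while random selection spreads slots across all operators.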

MEV-relays: marketplaces for optimized block-building

Ever since Proposer-Builder Separation (PBS) was introduced to Ethereum, relays, such as Flashbots, have become widely used, facilitating efficient negotiations between the two parties. However, this has led to increased centralization. As per mevwatch.info, around two-thirds of Ethereum blocks are currently built using relays, i.e. block proposers sell the right to compose blocks to specialized builders: sophisticated entities that focus on building the blocks that yield the highest revenue.

What is the impact of relays?

Centralization:

Around 65-70% of Ethereum blocks are built using relays.

The top five builders cover approx. 90% of blocks built through MEV-relays.

(Source: https://explorer.rated.network/builders?network=mainnet&timeWindow=all&page=1)

Censorship:

Over 50% of all Ethereum blocks are censored. (Source: mevwatch.info.)

MEV-relays show that auctions can indeed lead to heavy centralization. This is an important lesson learned, and we should aim to avoid that as much as possible in proof generation.

How do PBS auctions work, and can they be extrapolated to proof auctions?

To judge whether this model carries over to proving, we need to look at how relays work and how builders and proposers interact:

Builders compile blocks and send them to the relay along with a bid, which is the potential revenue the block proposer on Ethereum can earn. The block content is not yet revealed to ensure proposers cannot steal it and build the same themselves.

The block proposer reviews the bids and accepts the highest (they commit to the bid).

After the block proposer’s commitment, the block content of the accepted bid is revealed to the block proposer, who proposes it as the next block, and the bid gets settled.
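The three steps above follow a commit, select, reveal pattern, which can be sketched in a few lines of Python. This is a simplification rather than the actual relay protocol: a SHA-256 hash stands in for the hidden block content, and `SealedBid`, `select_highest`, and `reveal_matches` are hypothetical names chosen for illustration.

```python
import hashlib
from dataclasses import dataclass
from typing import List


def commit(payload: bytes) -> str:
    """Hash commitment: binds the builder to a payload without revealing it."""
    return hashlib.sha256(payload).hexdigest()


@dataclass
class SealedBid:
    builder: str
    bid: float       # revenue offered to the block proposer
    commitment: str  # hash of the still-hidden block content


def select_highest(bids: List[SealedBid]) -> SealedBid:
    """Step 2: the proposer commits to the highest bid."""
    return max(bids, key=lambda b: b.bid)


def reveal_matches(accepted: SealedBid, revealed_payload: bytes) -> bool:
    """Step 3: the revealed block must match the accepted commitment."""
    return commit(revealed_payload) == accepted.commitment
```

The proposer only sees `bid` and `commitment` when choosing, so it cannot copy the block content before committing to a bid.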

If proof generation is decentralized, it is the validator of the ZK-rollup, more often called the sequencer, who needs to source a proof for the block they’ve built. If we applied the relay mechanism to outsourced proof generation, the following process would take place:

Proof providers generate the proof for the block built by the sequencer, then submit a commitment showing that they hold a valid proof (without revealing the proof itself) along with a bid, i.e. the reward they ask for in return for the proof.

The sequencer selects the bid and commits to it.

The proof is shared with the sequencer, who submits the proof to L1, the block reaches finality, and the bid gets settled.

What is the impact of a PBS-like auction for proof generation?

Proving an L2 block or epoch requires orders of magnitude more compute than sophisticated block building. The above mechanism would therefore involve a significant amount of wasted compute, since every bidder must generate the proof but only one gets paid, and a race to the bottom in bids. Only rich entities can afford both. The result is predictable:

Centralization, and subsequently liveness and censorship risks,

Power for dominant provers to introduce unhealthy economic dynamics and gain control over the cost of proving.
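The scale of the waste in a PBS-like proof auction is easy to quantify. The sketch below assumes, for simplicity, that every bidder generates the full proof at the same cost and that only the winning proof is used. The function name and parameters are illustrative.

```python
from typing import Tuple


def wasted_compute(num_provers: int, cost_per_proof: float) -> Tuple[float, float]:
    """Return (wasted cost, wasted fraction) when only one proof is used.

    Assumes every bidder generates the full proof at the same cost.
    """
    if num_provers < 1 or cost_per_proof <= 0:
        raise ValueError("need at least one prover and a positive cost")
    total = num_provers * cost_per_proof
    wasted = (num_provers - 1) * cost_per_proof  # all proofs except the winner's
    return wasted, wasted / total
```

With ten bidders, nine of the ten proofs are discarded, so 90% of the compute spent on that block is wasted regardless of the per-proof cost.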

ZK-L2s require guarantees that auctions cannot fulfill

As long as proof generation includes wasted compute, it cannot get super-cheap. Thus, aiming to decrease the cost of proving, the majority of the proving infrastructure landscape has opted to remove wasted computation.

Lack of redundancy and centralization risks

Regardless of whether prover networks and markets apply simple Dutch auctions or more complex double-auction models with sophisticated matching algorithms, a single entity is selected and matched to each proof request. If that entity fails to deliver the proof, the bidding process needs to start all over, which requires additional action by the sequencer and introduces delays, likely resulting in finality issues and reorgs on the L2 chain. (The question of economic security, and how efficiently it can deter malicious actors from non-delivery, is not discussed in this post. We explore it in our latest State of the proving infra report for Q4.)

As long as cost is a dominant factor in an auction's matching algorithm, the elevated risk of centralization (and all the side effects that come with it) remains, driven by prover undercutting and rich entities becoming dominant.

A chain is only as strong as its weakest link

Following this line of thought, auctions are evidently popular because it is much easier to externalize proving economics, i.e. let the market decide the cost of proving, than to build a protocol that is decentralized, cheap, delivers the execution guarantees ZK-rollups need, and, despite being an external entity, does not introduce additional risks to the network being proven.

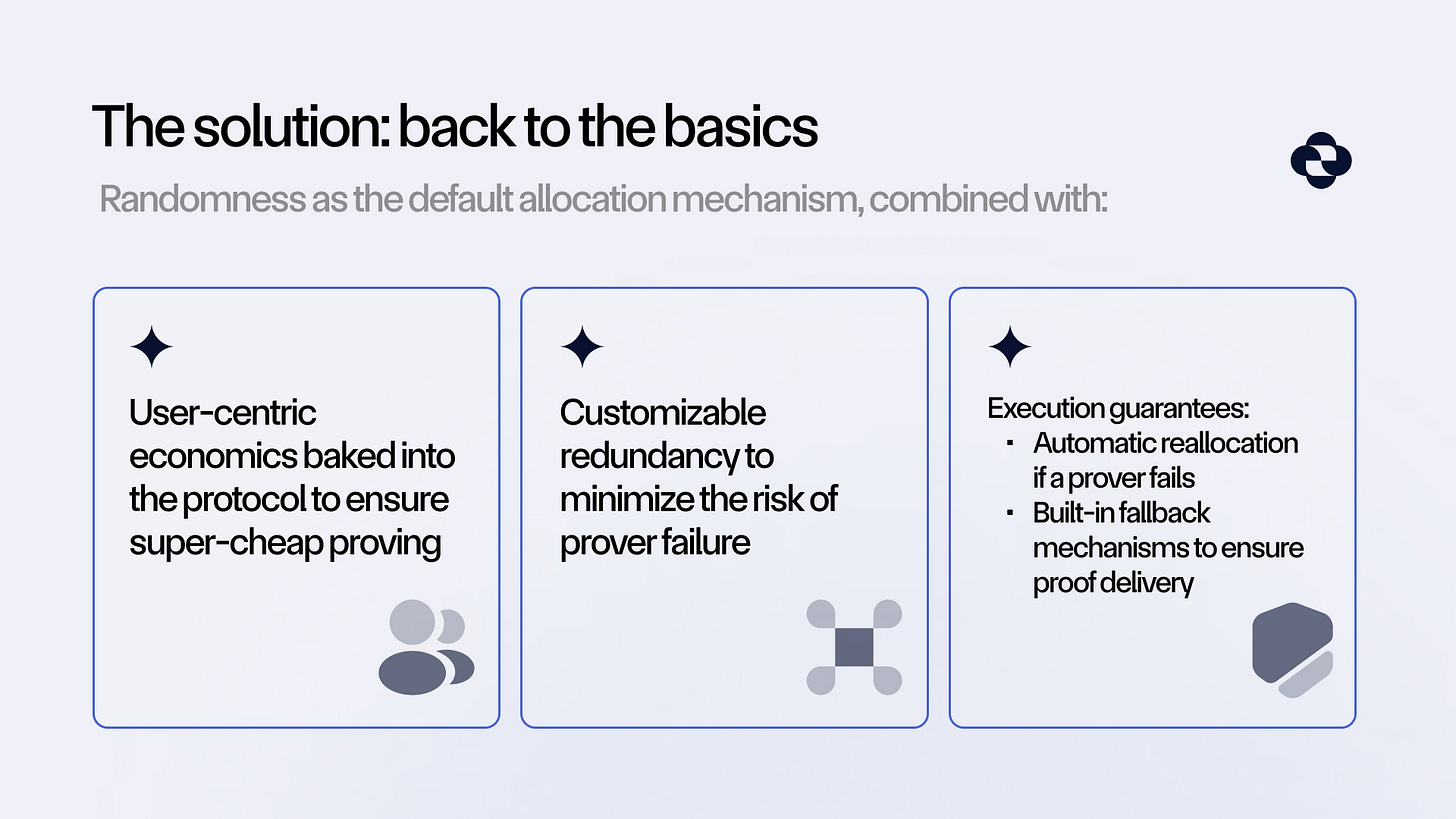

The solution: back to the basics

To find a suitable model, we need to go back to the basics and take randomness as the default mechanism for prover selection.

But we also need additional features:

User-centric economics: the economic design should ensure super-cheap proving for the users by default (with profitability for compute node operators), and should be baked into the protocol. This ensures that no single entity can control the cost of proving, and it remains predictable for L2s.

Customizable redundancy: users should be able to add redundancy (at a higher cost) if their use case requires it, or until the proof provider proves sufficiently reliable.

Execution guarantees through:

Automatic reallocation: if the selected prover does not explicitly accept the job, the proving workload should be automatically reallocated to a new random worker, minimizing the time it takes for a prover failure to become known.

Built-in fallback mechanism: in case of non-delivery, the protocol reassigns the job to another random worker.

Secondary fallback as last resort: if neither the initial nor the fallback prover delivers the proof, the job is open to any prover in the network to pick up and complete.

All the above should take place with no further action required from the L2 sequencer.
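The execution guarantees listed above can be sketched as a three-stage assignment loop. This is a minimal illustration under our own assumptions, not ZkCloud's actual implementation: `Prover`, `assign`, and `run_job` are hypothetical names, and acceptance and delivery are modeled as simple boolean flags instead of timeouts and on-chain messages.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Prover:
    name: str
    accepts: bool = True   # explicitly accepts an assigned job
    delivers: bool = True  # returns a valid proof before the deadline


def assign(pool: List[Prover]) -> Optional[Prover]:
    """Automatic reallocation: keep drawing until a prover accepts the job."""
    while pool:
        prover = pool.pop()  # pool is pre-shuffled, so this is a random draw
        if prover.accepts:
            return prover
    return None


def run_job(provers: List[Prover], rng: random.Random) -> Optional[Tuple[str, str]]:
    """Return (stage, prover name) for whoever delivers, or None if nobody does."""
    pool = list(provers)
    rng.shuffle(pool)

    # Stages 1 and 2: a randomly selected prover, then one random fallback.
    for stage in ("primary", "fallback"):
        prover = assign(pool)
        if prover is None:
            break
        if prover.delivers:
            return stage, prover.name

    # Stage 3: last resort, the job is open to any prover in the network.
    for prover in provers:
        if prover.accepts and prover.delivers:
            return "open", prover.name
    return None
```

Note that `run_job` takes no input from the sequencer after the initial request: every reassignment happens inside the loop, which is the property the list above asks for.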

Note: as mentioned earlier, we take sufficiently high economic security as a given, and do not discuss it here.

These are characteristics that auction-based prover networks and marketplaces do not have by default.

Does the solution exist now?

Yes.

Combine all of the above features, and you get ZkCloud.

We believe in a ZK future where proving is fast, cheap, and truly decentralized. ZkCloud has been designed with this vision in mind. It is decentralized, permissionless, and has been built to fully align with the needs of L2s when it comes to proof outsourcing.

Our production-ready prover network, Firestarter, is available now, delivering proofs. And with ZkCloud’s mainnet coming soon, we are setting the new standard for L2 proving infrastructure.

Long live ZK!

___

About ZkCloud:

ZkCloud, built by Gevulot, is the first universal proving infrastructure for ZK. Generate ZK proofs for any proof system at a fraction of the cost. Fast, cheap, and decentralized.
